Light GBM

Before discussing how Light GBM works, let's first understand why we need this algorithm when we have so many others (like the ones we have seen above). Light GBM is designed for very large datasets: compared to the other algorithms, it typically takes less time to train on a huge dataset.

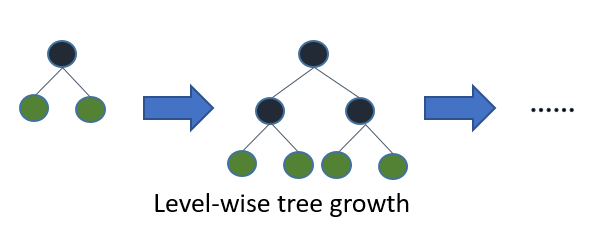

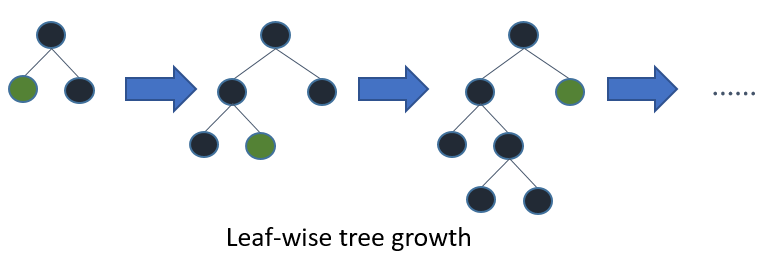

Light GBM is a gradient boosting framework that uses tree-based learning algorithms. It grows trees leaf-wise, whereas most other boosting implementations grow them level-wise.

The diagrams in this section, produced by the makers of Light GBM, illustrate the difference.

Level-wise tree growth in XGBoost.

Leaf-wise tree growth in Light GBM.

Leaf-wise splits increase tree complexity and may lead to overfitting. This can be countered by setting the max_depth parameter, which limits the depth to which splitting can occur.
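To make the two growth strategies concrete, here is a toy sketch in plain Python. It does not use Light GBM itself: the `gain` function is a made-up stand-in for a real split gain, and tree nodes are represented only by their path from the root ("L" for left, "R" for right). Under those assumptions, leaf-wise growth always splits the leaf with the largest gain, while level-wise growth splits every leaf at the current depth.

```python
import heapq

def gain(path):
    # Hypothetical split gain, chosen only for illustration:
    # nodes on the all-left path are far more rewarding to split.
    return 10 - len(path) if "R" not in path else 1

def grow_leaf_wise(num_leaves):
    # Max-heap of current leaves (gain is negated because heapq is a min-heap).
    leaves = [(-gain(""), "")]
    while len(leaves) < num_leaves:
        _, path = heapq.heappop(leaves)      # leaf with the largest gain
        for side in "LR":
            child = path + side
            heapq.heappush(leaves, (-gain(child), child))
    return sorted(path for _, path in leaves)

def grow_level_wise(num_leaves):
    # Split every leaf of the current level while the budget allows it.
    leaves = [""]
    while len(leaves) * 2 <= num_leaves:
        leaves = [path + side for path in leaves for side in "LR"]
    return leaves

leaf_wise = grow_leaf_wise(4)
level_wise = grow_level_wise(4)
print(leaf_wise)    # ['LLL', 'LLR', 'LR', 'R']
print(level_wise)   # ['LL', 'LR', 'RL', 'RR']
print(max(len(p) for p in leaf_wise), max(len(p) for p in level_wise))  # 3 2
```

With the same budget of four leaves, the leaf-wise tree ends up deeper and unbalanced, which is why a depth limit is a natural safeguard.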

Below, we will see the steps to install Light GBM and run a model using it. We will compare the results with those of XGBoost to prove that you should take Light GBM in a ‘LIGHT MANNER’.

Let us look at some of the advantages of Light GBM.

Advantages of Light GBM

Faster training speed and higher efficiency: Light GBM uses a histogram-based algorithm, i.e., it buckets continuous feature values into discrete bins, which speeds up training.

Lower memory usage: Replacing continuous values with discrete bins results in lower memory usage.

Often better accuracy than other boosting algorithms: It produces much more complex trees by following a leaf-wise split approach rather than a level-wise approach, which is the main factor in achieving higher accuracy. However, this can sometimes lead to overfitting, which can be limited by setting the max_depth parameter.

Compatibility with large datasets: It performs equally well on large datasets, with a significant reduction in training time compared to XGBoost.

Parallel learning supported
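The histogram idea behind the first two advantages can be sketched in a few lines of plain Python. This is a simplified illustration, not Light GBM's actual binning procedure: it assumes equal-width bins, and the helper names `make_bin_edges` and `to_bins` are hypothetical.

```python
from bisect import bisect_right

def make_bin_edges(values, max_bin):
    # Equal-width bin edges between the smallest and largest value
    # (an assumption for this sketch; the library chooses bins differently).
    lo, hi = min(values), max(values)
    width = (hi - lo) / max_bin
    return [lo + width * i for i in range(1, max_bin)]

def to_bins(values, edges):
    # Replace each continuous value by the index of the bin it falls into.
    return [bisect_right(edges, v) for v in values]

feature = [0.1, 0.4, 0.35, 2.0, 1.7, 0.9, 1.2, 0.05]
edges = make_bin_edges(feature, max_bin=4)
bins = to_bins(feature, edges)
counts = [bins.count(b) for b in range(4)]

print(bins)    # [0, 0, 0, 3, 3, 1, 2, 0]
print(counts)  # [4, 1, 1, 2]
```

After binning, a split search only has to consider the three bin edges instead of every distinct feature value, and each value can be stored as a small integer rather than a floating-point number.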

Code:

Sample code for regression problem:

Parameters

num_iterations:

It defines the number of boosting iterations to be performed.

num_leaves:

This parameter sets the maximum number of leaves in a tree.

In Light GBM, since splitting takes place leaf-wise rather than depth-wise, num_leaves should be smaller than 2^(max_depth); otherwise, it may lead to overfitting.
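As a quick arithmetic check of this rule, the sketch below computes the leaf count of a fully grown binary tree for a given depth and tests a candidate num_leaves against it. The helper names `leaf_limit` and `num_leaves_is_safe` are hypothetical and not part of Light GBM.

```python
def leaf_limit(max_depth):
    # Number of leaves in a fully grown binary tree of the given depth.
    return 2 ** max_depth

def num_leaves_is_safe(num_leaves, max_depth):
    # True when num_leaves stays strictly below the full-tree leaf count.
    return num_leaves < leaf_limit(max_depth)

print(leaf_limit(7))                # 128
print(num_leaves_is_safe(70, 7))    # True
print(num_leaves_is_safe(128, 7))   # False
```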

min_data_in_leaf:

It sets the minimum number of data points that a leaf must contain. A very small value may cause overfitting.

It is also one of the most important parameters in dealing with overfitting.

max_depth:

It specifies the maximum depth or level up to which a tree can grow.

A very high value for this parameter can cause overfitting.

bagging_fraction:

It specifies the fraction of the data to be used for each iteration.

This parameter is generally used to speed up training. It only takes effect when bagging_freq is also set to a non-zero value.
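The effect of bagging_fraction can be pictured as drawing a random subset of rows, without replacement, before each iteration. The following plain-Python sketch illustrates the idea only; the helper `bagging_indices` is hypothetical and does not reproduce the library's internal sampling.

```python
import random

def bagging_indices(n_rows, bagging_fraction, seed):
    # Pick a random subset of row indices, without replacement.
    rng = random.Random(seed)
    n_selected = int(n_rows * bagging_fraction)
    return sorted(rng.sample(range(n_rows), n_selected))

n_rows = 10
subsets = [bagging_indices(n_rows, bagging_fraction=0.5, seed=i) for i in range(3)]
for iteration, rows in enumerate(subsets):
    print(f"iteration {iteration}: train on rows {rows}")
```

Each iteration sees only half of the rows here, which is where the speed-up comes from.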

max_bin:

Defines the maximum number of bins into which feature values will be bucketed.

A smaller value of max_bin can save a lot of training time, because fewer bins mean fewer candidate split points to evaluate, though it may reduce accuracy.
