Questions: Metrics

1. What is precision and recall?

The metrics commonly used to evaluate an NLP model are precision, recall, and F1 score. Accuracy is also used to evaluate the model's performance. It is the ratio of correct predictions to the total number of predictions.

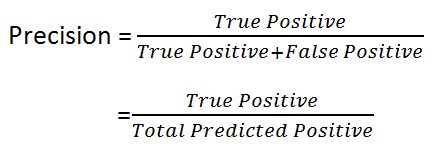

Precision is the ratio of true positives to the total number of instances predicted as positive: $\text{Precision} = \frac{TP}{TP + FP}$, where $TP$ is the number of true positives and $FP$ the number of false positives.

It indicates how exact the model's positive predictions are, i.e., of all the examples the model labels as positive (the class we care about), how many are actually positive?

Recall is the ratio of true positives to the total number of actual positive instances: $\text{Recall} = \frac{TP}{TP + FN}$, where $FN$ is the number of false negatives.

It measures how effectively the model recalls, or finds, the positive class, i.e., of all the actual positive examples, how many does the model correctly identify?
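The two definitions can be sketched in Python as follows. This is a minimal illustration, assuming binary labels where `1` marks the positive class; the function name `precision_recall`, the sample labels, and the choice to return `0.0` when a denominator is zero are hypothetical conventions, not part of the original text.

```python
def precision_recall(y_true, y_pred, positive=1):
    """Return (precision, recall) for a single positive class."""
    # True positives: predicted positive and actually positive.
    tp = sum(1 for t, p in zip(y_true, y_pred) if p == positive and t == positive)
    # False positives: predicted positive but actually negative.
    fp = sum(1 for t, p in zip(y_true, y_pred) if p == positive and t != positive)
    # False negatives: predicted negative but actually positive.
    fn = sum(1 for t, p in zip(y_true, y_pred) if p != positive and t == positive)
    # Assumed convention: report 0.0 instead of dividing by zero.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Hypothetical labels: 4 actual positives, 3 positive predictions.
y_true = [1, 1, 1, 1, 0, 0, 0, 0]
y_pred = [1, 1, 0, 0, 1, 0, 0, 0]
print(precision_recall(y_true, y_pred))  # precision = 2/3, recall = 2/4
```

Here the model is right about 2 of its 3 positive predictions (precision) but finds only 2 of the 4 actual positives (recall).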

2. What is F1 score in NLP?

The F1 score is the harmonic mean of precision and recall, so it takes both false positives and false negatives into account when evaluating the model. F1 is more informative than accuracy for an NLP model when the class distribution is uneven (imbalanced). The formula for the F1 score is:

$$F_1 = 2 \cdot \frac{\text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}$$
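A minimal Python sketch of the harmonic-mean formula, mainly to show why it is stricter than a plain average. The function name `f1_score` and the rule of returning `0.0` when precision and recall are both zero are assumptions made for this illustration.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    # Assumed convention: F1 is 0 when precision and recall are both 0.
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# When precision and recall are equal, F1 equals them (0.8 here, up to rounding).
print(f1_score(0.8, 0.8))
# Lopsided case: the arithmetic mean would be 0.5, but
# F1 = 2 * 0.09 / 1.0 = 0.18 (up to floating-point rounding).
print(f1_score(0.9, 0.1))
```

The harmonic mean is pulled toward the smaller of the two values, so a model cannot reach a high F1 by maximizing only precision or only recall.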

3. Accuracy:

Accuracy is used when the output variable is categorical or discrete. It is the percentage of the model's predictions that are correct, out of the total number of predictions made.
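A minimal Python sketch of accuracy, followed by a hypothetical imbalanced dataset that shows why accuracy alone can mislead. The function name `accuracy` and the 95/5 class split are illustrative assumptions.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    if not y_true:
        raise ValueError("accuracy is undefined for an empty label list")
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return correct / len(y_true)


# Hypothetical imbalanced data: 95 negatives, 5 positives.
y_true = [0] * 95 + [1] * 5
# A degenerate classifier that always predicts the majority class.
y_pred = [0] * 100
print(accuracy(y_true, y_pred))  # 0.95, although no positive instance is found
```

In this example recall for the positive class is 0 (none of the 5 positives is predicted), so its F1 score is 0 as well, even though accuracy is 95%.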

4. AUC:

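AUC (area under the ROC curve) summarizes how the true positive rate (recall) trades off against the false positive rate as the prediction threshold is varied. An AUC of 1.0 means the model ranks every positive instance above every negative one, while an AUC of 0.5 is no better than random guessing.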
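The threshold sweep can be sketched in Python: lower the threshold past each distinct score, record the resulting (false positive rate, true positive rate) point, and add up the trapezoids under the curve. This assumes binary labels (`1` = positive), at least one example of each class, and that a higher score means "more likely positive"; the function name `roc_auc` is hypothetical. In practice, scikit-learn provides `sklearn.metrics.roc_auc_score` for the same quantity.

```python
def roc_auc(y_true, scores):
    """Area under the ROC curve (TPR vs. FPR) via the trapezoidal rule."""
    n_pos = sum(1 for t in y_true if t == 1)
    n_neg = len(y_true) - n_pos
    if n_pos == 0 or n_neg == 0:
        raise ValueError("AUC needs at least one positive and one negative example")

    # Visit examples from highest to lowest score; lowering the threshold
    # past each distinct score turns those examples into positive predictions.
    ranked = sorted(zip(scores, y_true), key=lambda pair: pair[0], reverse=True)

    tp = fp = 0
    prev_tpr = prev_fpr = 0.0
    area = 0.0
    i = 0
    while i < len(ranked):
        threshold = ranked[i][0]
        # Tied scores share one threshold, so they are added together.
        while i < len(ranked) and ranked[i][0] == threshold:
            if ranked[i][1] == 1:
                tp += 1
            else:
                fp += 1
            i += 1
        tpr = tp / n_pos  # true positive rate (recall)
        fpr = fp / n_neg  # false positive rate
        # Trapezoid between the previous ROC point and this one.
        area += (fpr - prev_fpr) * (tpr + prev_tpr) / 2
        prev_tpr, prev_fpr = tpr, fpr
    return area


print(roc_auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8]))  # 0.75
```

The result equals the probability that a randomly chosen positive example is scored higher than a randomly chosen negative one: here 3 of the 4 positive/negative pairs are ordered correctly.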

5. What do you mean by perplexity in NLP?

Perplexity is a metric for evaluating language models. Mathematically, it is a function of the probability that the language model assigns to a test sample. The perplexity of a test sample $X = x_1, x_2, \ldots, x_N$ is given by

$$PP(X) = P(x_1, x_2, \ldots, x_N)^{-\frac{1}{N}}$$

where $N$ is the total number of word tokens.

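Lower perplexity is better: the higher the perplexity, the lower the probability the language model assigns to the test sample, i.e., the more "surprised" the model is by text it should be able to predict.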
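A minimal Python sketch of the perplexity formula, assuming the model's per-token conditional probabilities are already available (how they are obtained depends on the model and is out of scope here). The function name `perplexity` and the sample probabilities are hypothetical. The sketch works in log space because multiplying many small probabilities quickly underflows to zero.

```python
import math


def perplexity(token_probs):
    """Perplexity from the probability the model gave each of the N test tokens.

    token_probs[i] is assumed to be P(x_i | x_1, ..., x_{i-1}) and > 0, so by the
    chain rule their product is the joint probability P(x_1, ..., x_N).
    """
    n = len(token_probs)
    if n == 0:
        raise ValueError("perplexity needs at least one token")
    # log P(x_1..x_N) as a sum of per-token log probabilities.
    log_prob = sum(math.log(p) for p in token_probs)
    # PP(X) = P(x_1..x_N)^(-1/N) = exp(-(1/N) * log P(x_1..x_N))
    return math.exp(-log_prob / n)


# A model that spreads probability uniformly over 4 choices at every step
# has perplexity 4 (up to floating-point rounding).
print(perplexity([0.25] * 10))
# A model that is more confident about the true tokens has lower perplexity.
print(perplexity([0.9, 0.8, 0.95, 0.7]))
```

The uniform case gives a useful intuition: a perplexity of $k$ means the model is, on average, about as uncertain as if it were choosing uniformly among $k$ tokens at each step.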
